Thammasat University students who are interested in linguistics, political science, sociology, literature, philosophy, media and communication studies, and related subjects may find a newly available book useful.

Hate Speech: Linguistic Perspectives is an Open Access book, available for free download at this link:

https://www.degruyter.com/document/doi/10.1515/9783110672619/html

Its author is Professor Victoria Guillén-Nieto, who teaches linguistics at the University of Alicante, Spain.

The TU Library collection includes several books about different aspects of hate speech.

Online hate speech is a type of speech that has the purpose of attacking a person or a group based on their race, religion, ethnic origin, sexual orientation, disability, and/or gender. Online hate speech is not easily defined, but it can be recognized by the degrading or dehumanizing function it serves.

The publisher’s description of the book follows:

Hate speech has been extensively studied by disciplines such as social psychology, sociology, history, politics and law. Some significant areas of study have been the origins of hate speech in past and modern societies around the world; the way hate speech paves the way for harmful social movements; the socially destructive force of propaganda; and the legal responses to hate speech. On reviewing the literature, one major weakness stands out: hate speech, a crime perpetrated primarily by malicious and damaging language use, has no significant study in the field of linguistics. Historically, pragmatic theories have tended to address language as cooperative action, geared to reciprocally informative polite understanding. As a result of this idealized view of language, negative types of discourse such as harassment, defamation, hate speech, etc. have been neglected as objects of linguistic study. Since they go against social, moral and legal norms, many linguists have wrongly depicted those acts of wrong communication as unusual, anomalous or deviant when they are, in fact, usual and common in modern societies all over the world.

The book analyses the challenges legal practitioners and linguists must meet when dealing with hate speech, especially with the advent of new technologies and social networks, and takes a linguistic perspective by targeting the knowledge the linguist can provide that makes harassment actionable.

The book begins:

The meaning of the term hate speech is rather opaque, although, at first sight, it gives the opposite impression. Looking at the semantics of its constituent parts – that is, hate and speech – one may think that the term describes a subcategory of speech associated with the expression of hate or hatred towards people in general. However, we know by experience that its use is neither limited to speech nor to the expression of hatred. We also know that the target is not the general public but, instead, members of groups or classes of people identifiable by legally-protected characteristics, such as race, ethnicity, religion, sex, sexual orientation, disability, amongst others. Therefore, the meaning of hate speech is not a function of the literal meanings of its constituent parts. On the contrary, it has multiple meanings, as suggested by Brown. The social phenomenon and the legal concept of hate speech are necessarily intertwined. As a social phenomenon, hate speech fuels broad-scale conflicts that may cause a breach of the peace or even create environments conducive to hate crimes. As a legal concept, hate speech is an abstract endangerment statute because it punishes not actual but hypothetical creation of social risk, and must find a balance between the fundamental rights to freedom of opinion and expression and dignity. The analysis of hate speech is well documented in law, sociology and media and communication studies.

According to the website of the United Nations,

In common language, “hate speech” refers to offensive discourse targeting a group or an individual based on inherent characteristics (such as race, religion or gender) and that may threaten social peace.

To provide a unified framework for the United Nations to address the issue globally, the UN Strategy and Plan of Action on Hate Speech defines hate speech as…“any kind of communication in speech, writing or behaviour, that attacks or uses pejorative or discriminatory language with reference to a person or a group on the basis of who they are, in other words, based on their religion, ethnicity, nationality, race, colour, descent, gender or other identity factor.”

However, to date there is no universal definition of hate speech under international human rights law. The concept is still under discussion, especially in relation to freedom of opinion and expression, non-discrimination and equality.

While the above is not a legal definition and is broader than “incitement to discrimination, hostility or violence” (which is prohibited under international human rights law), it has three important attributes:

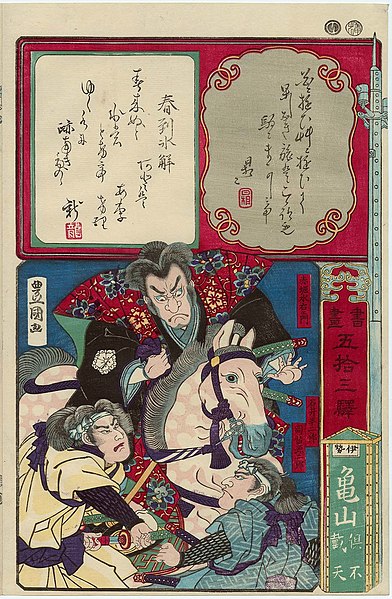

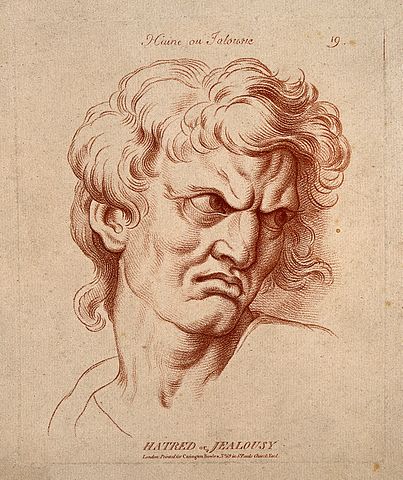

- Hate speech can be conveyed through any form of expression, including images, cartoons, memes, objects, gestures and symbols, and it can be disseminated offline or online.

- Hate speech is “discriminatory” (biased, bigoted or intolerant) or “pejorative” (prejudiced, contemptuous or demeaning) of an individual or group.

- Hate speech calls out real or perceived “identity factors” of an individual or a group, including: “religion, ethnicity, nationality, race, colour, descent, gender,” but also characteristics such as language, economic or social origin, disability, health status, or sexual orientation, among many others.

It’s important to note that hate speech can only be directed at individuals or groups of individuals. It does not include communication about States and their offices, symbols or public officials, nor about religious leaders or tenets of faith.

The growth of hateful content online has been coupled with the rise of easily shareable disinformation enabled by digital tools. This raises unprecedented challenges for our societies as governments struggle to enforce national laws in the virtual world’s scale and speed.

Unlike in traditional media, online hate speech can be produced and shared easily, at low cost and anonymously. It has the potential to reach a global and diverse audience in real time. The relative permanence of hateful online content is also problematic, as it can resurface and (re)gain popularity over time.

Understanding and monitoring hate speech across diverse online communities and platforms is key to shaping new responses. But efforts are often stunted by the sheer scale of the phenomenon, the technological limitations of automated monitoring systems and the lack of transparency of online companies.

Meanwhile, the growing weaponization of social media to spread hateful and divisive narratives has been aided by online corporations’ algorithms. This has intensified the stigma vulnerable communities face and exposed the fragility of our democracies worldwide. It has raised scrutiny on Internet players and sparked questions about their role and responsibility in inflicting real world harm. As a result, some States have started holding Internet companies accountable for moderating and removing content considered to be against the law, raising concerns about limitations on freedom of speech and censorship.

Despite these challenges, the United Nations and many other actors are exploring ways of countering hate speech. These include initiatives to promote greater media and information literacy among online users while ensuring the right to freedom of expression.

(All images courtesy of Wikimedia Commons)